Semantic-aware View Prediction for 360-degree Videos at the 5G Edge

Vats, Shivi and Park, Jounsup and Nahrstedt, Klara and Zink, Michael and Sitaraman, Ramesh and Hellwagner, Hermann

2022 IEEE International Symposium on Multimedia (ISM)

You can read the whole paper here.

Background

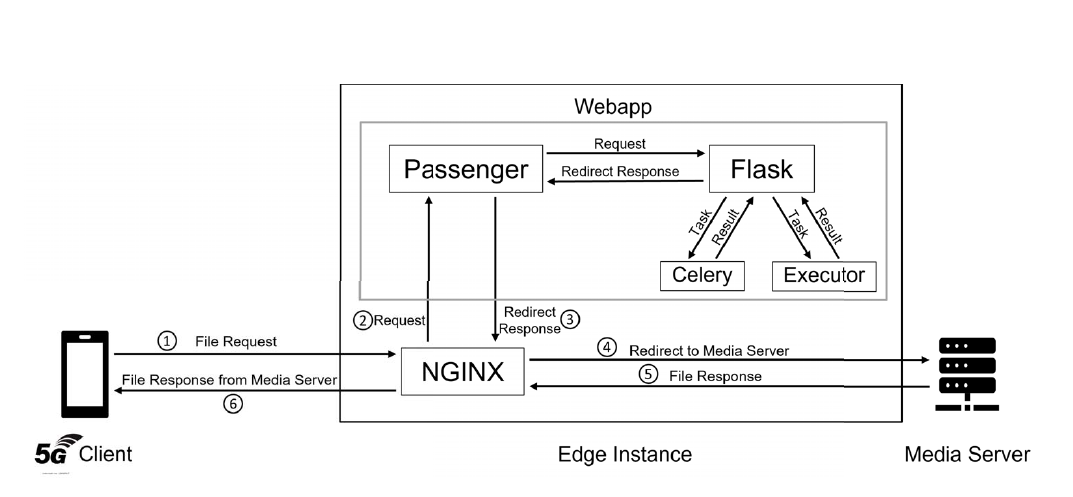

This paper is the culmination of 1.5 years of work on the 5G Playground Carinthia use case “Virtual Realities”. I joined the project as a Project Assistant at my university halfway through its duration. In my time there, I worked on a Python web app built with Flask, Celery, Redis, and Passenger that predicts the viewport for 360° videos in advance. The view prediction algorithm was SEAWARE; my work involved porting it from MATLAB to Python using NumPy and SciPy and running it in the web app in real time as the user watched the video. NGINX served the web app and streamed the video.

The other part of my work was a DASH Android client for 360° videos. The work was tedious because little documentation was available, but I reverse-engineered the client’s algorithm and modified it so that the client requests new tiles whenever the user’s viewport changes, not only when a DASH segment ends. This reduced the motion-to-glass latency by up to 62% compared to the regular streaming method.
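To illustrate the idea of requesting tiles on viewport changes, here is a minimal Python sketch. It is not the Android client's code: the 8×4 equirectangular tile grid, the 90° field of view, and the names `visible_tiles` and `ViewportTileRequester` are assumptions made for this example, and the viewport is approximated as a simple yaw/pitch rectangle.

```python
import math


def visible_tiles(yaw_deg, pitch_deg, h_fov_deg=90.0, v_fov_deg=90.0,
                  cols=8, rows=4):
    """Return the (row, col) tiles overlapped by the viewport.

    Assumed layout: column 0 starts at yaw 0 and yaw wraps at 360 degrees;
    row 0 is the top of the frame (pitch +90).
    """
    tile_w = 360.0 / cols
    tile_h = 180.0 / rows

    # Vertical extent, clamped at the poles.
    top = min(90.0, pitch_deg + v_fov_deg / 2)
    bottom = max(-90.0, pitch_deg - v_fov_deg / 2)
    first_row = math.floor((90.0 - top) / tile_h)
    last_row = max(first_row, math.ceil((90.0 - bottom) / tile_h) - 1)

    # Horizontal extent; column indices wrap around via the modulo below.
    left = yaw_deg - h_fov_deg / 2
    right = yaw_deg + h_fov_deg / 2
    first_col = math.floor(left / tile_w)
    last_col = max(first_col, math.ceil(right / tile_w) - 1)

    return {
        (row, col % cols)
        for row in range(first_row, last_row + 1)
        for col in range(first_col, last_col + 1)
    }


class ViewportTileRequester:
    """Requests tiles as soon as they enter the viewport."""

    def __init__(self, fetch):
        self._fetch = fetch      # hypothetical callback: receives a list of tiles
        self._current = set()    # tiles covered by the last known viewport

    def on_viewport_change(self, yaw_deg, pitch_deg):
        tiles = visible_tiles(yaw_deg, pitch_deg)
        newly_visible = tiles - self._current
        self._current = tiles
        if newly_visible:
            # Fetch immediately instead of waiting for the segment boundary.
            self._fetch(sorted(newly_visible))
        return newly_visible
```

A player would call `on_viewport_change` from its head-tracking callback; only tiles that just became visible trigger a request, so small head movements inside the same tiles cost nothing.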

Below is the architecture of the app I developed.

Abstract

In a 5G testbed, we use 360° video streaming to test, measure, and demonstrate the 5G infrastructure, including the capabilities and challenges of edge computing support. Specifically, we use the SEAWARE (Semantic-Aware View Prediction) software system, originally described in [1], at the edge of the 5G network to support a 360° video player (handling tiled videos) by view prediction. Originally, SEAWARE performs semantic analysis of a 360° video on the media server, by extracting, e.g., important objects and events.

This video semantic information is encoded in specific data structures and shared with the client in a DASH streaming framework. Making use of these data structures, the client/player can perform view prediction without in-depth, computationally expensive semantic video analysis.

In this paper, the SEAWARE system was ported and adapted to run (partially) on the edge where it can be used to predict views and prefetch predicted segments/tiles in high quality in order to have them available close to the client when requested. The paper gives an overview of the 5G testbed, the overall architecture, and the implementation of SEAWARE at the edge server. Since an important goal of this work is to achieve low motion-to-glass latencies, we developed and describe “tile postloading”, a technique that allows non-predicted tiles to be fetched in high quality into a segment already available in the player buffer.

The performance of 360° tiled video playback on the 5G infrastructure is evaluated and presented. Current limitations of the 5G network in use and some challenges of DASH-based streaming and of edge-assisted viewport prediction under “real-world” constraints are pointed out; further, the performance benefits of tile postloading are disclosed.
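The tile postloading idea from the abstract can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the implementation from the paper: the `BufferedSegment` structure, the "low"/"high" quality labels, and the `fetch_high` callback are hypothetical names introduced here.

```python
from dataclasses import dataclass, field


@dataclass
class BufferedSegment:
    """A segment already sitting in the player buffer (hypothetical structure)."""
    index: int
    # Maps tile id -> quality label; predicted tiles arrive as "high".
    tile_quality: dict = field(default_factory=dict)


def postload(segment, viewport_tiles, fetch_high):
    """Upgrade non-predicted tiles of a buffered segment in place.

    Any tile that is in the actual viewport but not yet available in high
    quality is fetched via fetch_high(segment_index, tile) and marked "high".
    Returns the list of tiles that were upgraded.
    """
    upgraded = []
    for tile in sorted(viewport_tiles):
        if segment.tile_quality.get(tile) != "high":
            fetch_high(segment.index, tile)
            segment.tile_quality[tile] = "high"
            upgraded.append(tile)
    return upgraded
```

In this sketch, tiles that were predicted correctly are left alone, so a good prediction causes no extra requests and a mispredicted view costs one high-quality fetch per missing tile.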

For more information, please read the paper here.

Thanks for reading!


[1] J. Park, M. Wu, K.-Y. Lee, B. Chen, K. Nahrstedt, M. Zink, and R. Sitaraman, “SEAWARE: Semantic Aware View Prediction System for 360-degree Video Streaming,” in Proc. IEEE Int’l Symposium on Multimedia (ISM), 2020, pp. 57–64.